How to Download All The Files & Assets From a Webpage by Just Running 1 Script in Dev Tools Console…

Time for some automated web scraping!

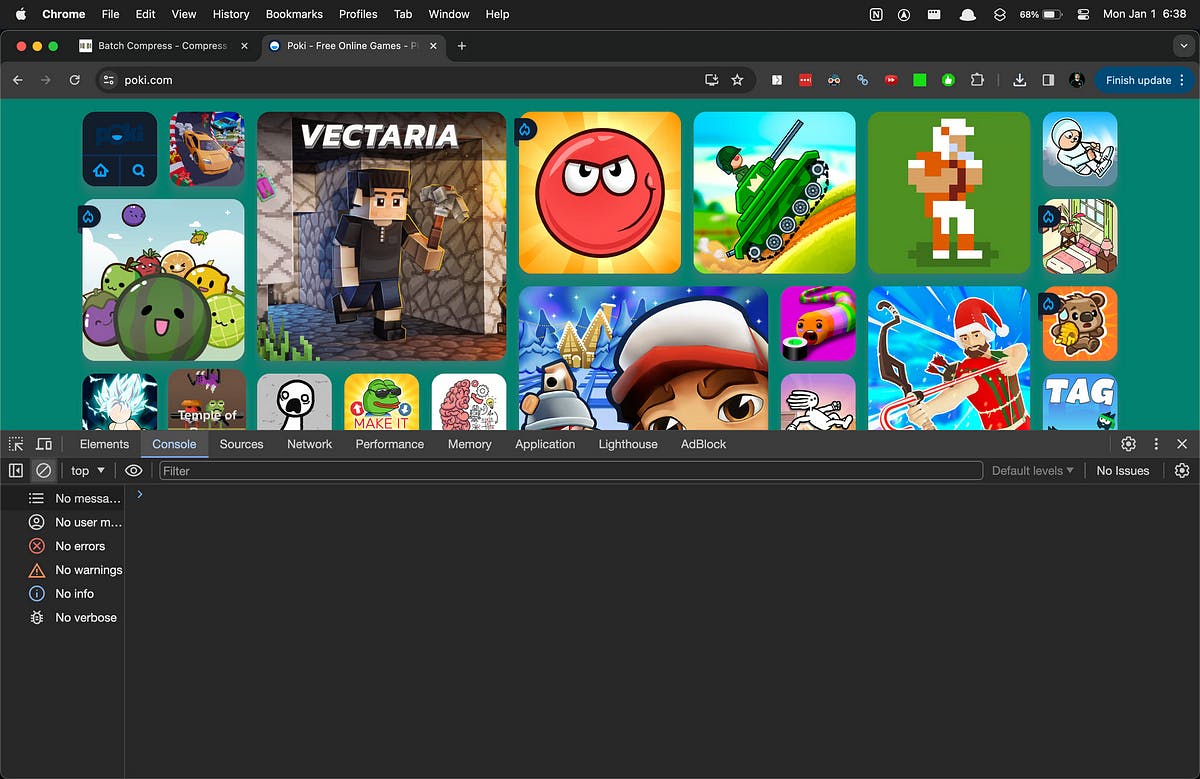

I’ll demonstrate on poki.com!

Step 1: Choose a website & open the Dev Tools console

Load the page you want to scrape, then open the Dev Tools console.

To do this, right-click anywhere on the webpage and select “Inspect” or “Inspect Element”.

This will open the Developer Tools panel, where you can find the Console tab.

Step 2: Run this script in the Console

Once you have the Dev Tools Console open, paste in this script and hit Enter:

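// Get the URLs of all loaded resources: keep only http(s) names (this skips non-URL entries such as 'first-paint') and drop duplicates

let urls = [...new Set(performance.getEntries().map(entry => entry.name).filter(name => /^https?:\/\//.test(name)))].join('\n');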

// Create a Blob with the URLs

let blob = new Blob([urls], {type: 'text/plain'});

// Create a download link and trigger it

let a = document.createElement('a');

a.href = URL.createObjectURL(blob);

a.download = 'urls.txt';

document.body.appendChild(a);

a.click();

document.body.removeChild(a);

The provided script performs the following steps:

- It collects the URLs of all the resources loaded on the webpage.

- It combines these URLs into a string with each URL separated by a new line.

- It creates a text file with the collected URLs.

- It generates a temporary download link for the text file.

- It triggers the download of the file by simulating a click on the download link.

AKA it extracts the URLs of the loaded resources and saves them in a text file for later use.

You’ll end up with a “urls.txt” file in your browser’s download folder, with one URL per line.
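If you only want certain kinds of assets, the list can be narrowed before it is saved. Here's a minimal sketch of one way to do that, assuming the entries come from `performance.getEntriesByType('resource')`, where each entry carries an `initiatorType` such as `img` or `script`. The helper name `pickUrls` and its parameters are made up for illustration and are not part of the script above.

```javascript
// Hypothetical helper (not part of the original script): keep only http(s)
// URLs whose initiatorType is listed in `types`, with duplicates removed.
function pickUrls(entries, types) {
  const wanted = new Set(types);
  const urls = entries
    .filter((entry) => wanted.has(entry.initiatorType))
    .map((entry) => entry.name)
    .filter((name) => /^https?:\/\//.test(name));
  return [...new Set(urls)];
}

// In the browser console you could then build the text like this:
// pickUrls(performance.getEntriesByType('resource'), ['img', 'script']).join('\n');
```

The function takes plain objects, so you can try it on a hand-written list first before pointing it at a real page.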

Step 3: Run this Bash script in the Terminal

- Open your “Terminal”

- Paste the code below and press Enter (this assumes wget is installed on your machine)

#!/bin/bash

# Navigate to the desktop

cd ~/Desktop

# Create 'assets' directory

mkdir -p assets

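# Move 'urls.txt' into 'assets' directory (assumes the file was saved to the Desktop; if your browser put it in ~/Downloads, move it to the Desktop first)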

mv urls.txt assets/

# Change to 'assets' directory

cd assets

# Use wget to download files from the URLs

wget -i urls.txt

The provided Bash script performs the following steps:

- It navigates to the desktop by using the cd command followed by the path ~/Desktop.

- It creates a directory named “assets” on the desktop using the mkdir -p command.

- It moves the file “urls.txt” into the “assets” directory using the mv command.

- It changes the current working directory to the “assets” directory using the cd command followed by the path assets.

- It uses the wget command with the -i flag to download files from the URLs listed in the “urls.txt” file.

In short, the script gathers everything in an “assets” folder on the desktop and has wget fetch each URL listed in “urls.txt”.

Together, the two scripts download the files and assets that the webpage had loaded in the browser by the time you ran the console script. Anything the page loads after that is not in the list.

This includes resources such as images, scripts, stylesheets, and other files.
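Before downloading, it can help to see what kinds of files are actually in the list. The sketch below counts URLs per file extension. It assumes every item is an absolute URL, and the name `countByExtension` is hypothetical, introduced here only for illustration.

```javascript
// Hypothetical helper: count URLs per file extension.
// URLs whose last path segment has no extension are counted under "(none)".
function countByExtension(urls) {
  const counts = {};
  for (const url of urls) {
    const path = new URL(url).pathname; // drops the query string and hash
    const file = path.slice(path.lastIndexOf('/') + 1);
    const dot = file.lastIndexOf('.');
    const ext = dot > 0 ? file.slice(dot + 1).toLowerCase() : '(none)';
    counts[ext] = (counts[ext] || 0) + 1;
  }
  return counts;
}

// In the browser console, for example:
// countByExtension(performance.getEntriesByType('resource').map(e => e.name));
```

A quick look at the counts tells you whether the download will be mostly images, scripts, or something else.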

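💡 Note: You might wanna slow it down juuuust a tad 😅 For example, wget's --wait option (as in wget --wait=1 -i urls.txt) pauses for a second between downloads, so you don't hammer the server.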

What do you end up with?

Well, all the resources loaded on that webpage — from images, to code files like HTML, CSS, JS, and more!

Cheers & happy automating!